Authors: Mathis Pierron, João Guimarães, Zoltan Arvai, Sarah Kennelly

A US media household name, renowned for delivering entertainment and sports content at scale, recognised an opportunity to expand its audience reach and strengthen its online presence as viewer behaviour shifted decisively towards mobile-first and social platforms.

The media company partnered with YLD to evolve its content strategy, reach a broader audience, and deepen viewer engagement across a growing mix of digital channels. We combined our expertise in advanced software engineering, machine learning, and data-driven automation with the company's content strengths to enhance its mobile video experience.

Our collaboration focused on transforming sports and talk show content into mobile-native formats, optimising both viewer engagement and engineering efficiency, and delivering a slick, scalable solution that truly reflects the strengths of both teams.

Keeping viewers hooked with Object and Active Speaker Detection

The first few seconds of social media content are critical: drop-off rates rise significantly if a video doesn't immediately deliver what viewers are looking for. To minimise drop-off, we developed a tool that automates and streamlines the transformation of video content into social-media-optimised formats, placing the key subject front and centre to capture attention and boost engagement.

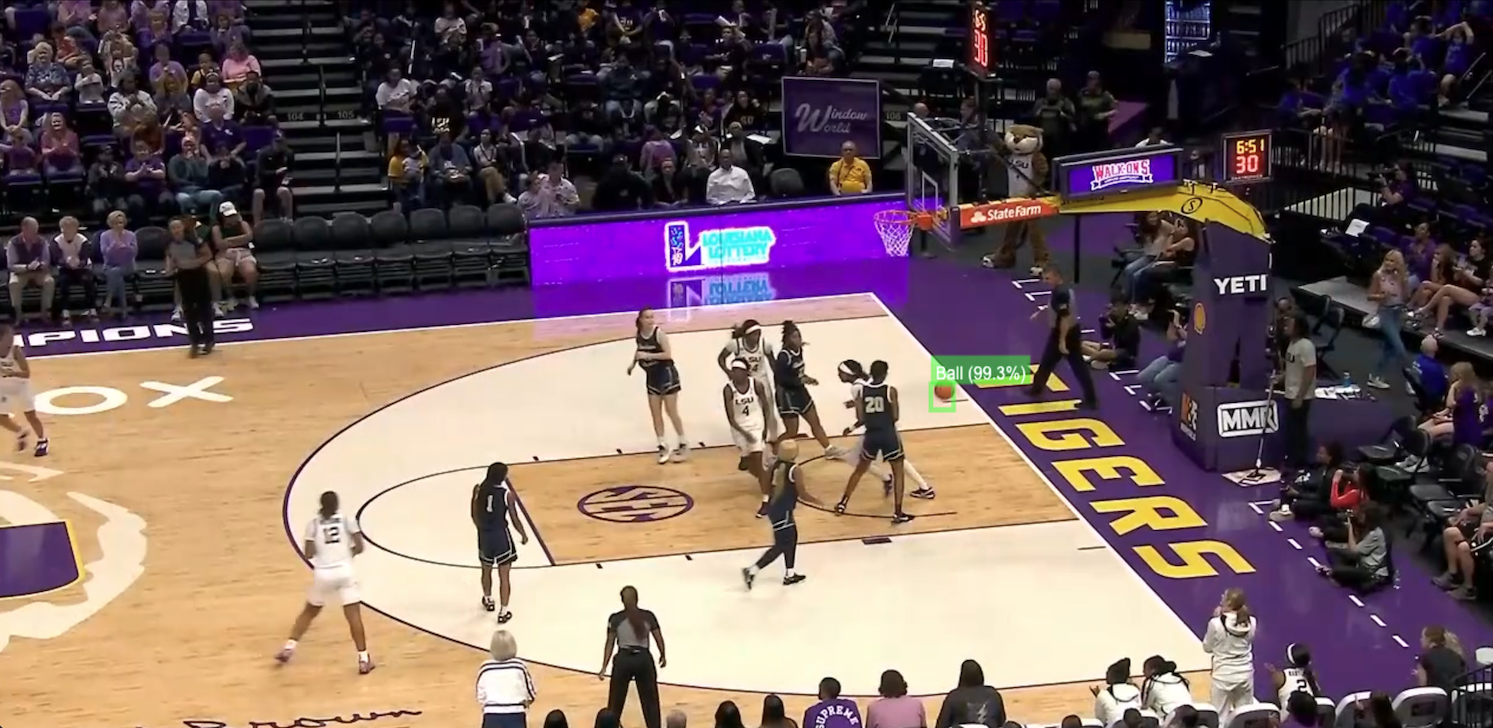

Tracking fast-moving subjects in sports and managing speaker transitions in talk show footage pose significant challenges, from motion blur to visual obstructions. To solve this reliably at scale, we chose Darknet, whose YOLO-based detectors run exceptionally fast while maintaining high precision. Its real-time detection and high throughput make it a strong fit for automated tracking in dynamic environments, reducing processing overhead while preserving accuracy across varied use cases.

To improve video framing for mobile and social platforms, we implemented a verticalisation logic that dynamically centres key action in each frame. The system defaults to a crop centred on the middle of the frame, then checks each frame for the presence of a ball. If a ball is detected, the crop shifts to keep it in focus, so viewers always see the most important part of the action.
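A minimal sketch of the cropping step, assuming a 16:9 source frame cropped to a full-height 9:16 window and a ball detector that returns an x-coordinate in pixels (or nothing). The function name and parameters are illustrative, not the production code.

```python
from typing import Optional, Tuple

def vertical_crop(frame_w: int, frame_h: int, ball_x: Optional[float] = None,
                  aspect: float = 9 / 16) -> Tuple[int, int]:
    """Return (left_x, crop_width) of a vertical crop window in pixels."""
    crop_w = min(frame_w, round(frame_h * aspect))  # 9:16 window, full height
    # Default to the middle of the frame; follow the ball when one is detected.
    centre_x = frame_w / 2 if ball_x is None else ball_x
    left = round(centre_x - crop_w / 2)
    # Clamp so the window never runs past the frame edges.
    left = max(0, min(left, frame_w - crop_w))
    return left, crop_w
```

For a 1920x1080 frame the window is 608 pixels wide, and a ball at x=1000 gives `vertical_crop(1920, 1080, 1000) == (696, 608)`. Clamping keeps the window inside the frame when the ball is near an edge, so the crop never shows empty borders.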

To reduce errors, such as tracking the wrong object or missing the actual ball, we added a filtering mechanism: only detections with at least 80% confidence are treated as valid. This improves the model's accuracy and helps it keep following the correct subject throughout the footage.
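The confidence filter can be sketched as below. The `Detection` type and the `"ball"` label are hypothetical; the actual class name depends on how the detector was trained (the standard COCO label, for instance, is `"sports ball"`).

```python
from dataclasses import dataclass
from typing import List, Optional

CONFIDENCE_THRESHOLD = 0.80  # minimum confidence for a detection to count

@dataclass
class Detection:
    label: str         # class name from the detector (hypothetical)
    confidence: float  # 0.0 to 1.0
    x: float           # box centre, pixels
    y: float

def confident_ball(detections: List[Detection],
                   threshold: float = CONFIDENCE_THRESHOLD) -> Optional[Detection]:
    """Return the most confident ball detection at or above the threshold, if any."""
    balls = [d for d in detections if d.label == "ball" and d.confidence >= threshold]
    return max(balls, key=lambda d: d.confidence, default=None)
```

When several detections pass the threshold, this sketch keeps the most confident one; other tie-breaking rules, such as proximity to the previous position, are equally plausible. A `None` result lets the crop fall back to its default.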

The result is a smoother, more engaging video that feels intentional and polished, particularly important for short-form sports content.

To further reduce tracking errors and missed key moments, we introduced scene detection logic so that ball detection operates within each scene independently, without being influenced by what happens before or after it.
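The article doesn't specify how scene boundaries are found. One common approach, shown here as an assumption, compares grayscale histograms of consecutive frames and starts a new scene when they differ sharply. Frames are modelled as flat lists of 0 to 255 intensities, and the threshold and bin count are illustrative values.

```python
from typing import List, Sequence

def histogram(frame: Sequence[int], bins: int = 16) -> List[float]:
    """Normalised grayscale histogram of a frame (pixel values 0..255)."""
    counts = [0] * bins
    for px in frame:
        counts[px * bins // 256] += 1
    n = len(frame)
    return [c / n for c in counts]

def split_scenes(frames: Sequence[Sequence[int]], threshold: float = 0.5,
                 bins: int = 16) -> List[range]:
    """Return scenes as ranges of frame indices, cutting on large histogram changes."""
    if not frames:
        return []
    cuts = [0]
    prev = histogram(frames[0], bins)
    for i in range(1, len(frames)):
        cur = histogram(frames[i], bins)
        # Half the L1 distance between normalised histograms lies in [0, 1].
        diff = sum(abs(a - b) for a, b in zip(prev, cur)) / 2
        if diff > threshold:
            cuts.append(i)
        prev = cur
    cuts.append(len(frames))
    return [range(a, b) for a, b in zip(cuts, cuts[1:])]
```

Each returned range can then be passed to the tracker on its own, so a detection in one shot never drags the crop in the next.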

We then applied ball position interpolation, estimating the ball's position between detections, so the camera follows the ball's movement fluidly rather than making abrupt adjustments. This creates a smoother, more professional viewing experience, particularly in fast-paced sports footage.
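Interpolation can be sketched as linear filling between known positions within a scene. This assumes positions are stored per frame as `(x, y)` tuples, with `None` where the detector found nothing confident; the production system may use a different scheme, such as spline fitting.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float]

def interpolate_track(track: List[Optional[Point]]) -> List[Optional[Point]]:
    """Linearly fill gaps between known ball positions.

    Leading and trailing gaps (no known position on one side) stay None.
    """
    known = [i for i, p in enumerate(track) if p is not None]
    out = list(track)
    for a, b in zip(known, known[1:]):
        (xa, ya), (xb, yb) = track[a], track[b]
        for i in range(a + 1, b):
            t = (i - a) / (b - a)  # fraction of the way from frame a to frame b
            out[i] = (xa + t * (xb - xa), ya + t * (yb - ya))
    return out
```

Running it once per scene keeps the interpolation from bridging a cut between two unrelated shots.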

For studio-based content like talk shows, the camera must know who is speaking and follow the speaker smoothly. To support this, we have built an Active Speaker Detection (ASD) model with PyTorch, which identifies and tracks the active speaker in real time.

To enhance the viewing experience further, we built a custom post-processing solution that smooths transitions between speakers. This approach prevents abrupt camera shifts and creates a more polished and natural visual flow.
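A sketch of this kind of post-processing, assuming the ASD model emits one speaker ID (or `None`) per frame and that each speaker's horizontal position is known. It combines two common smoothing techniques: hysteresis, so the camera only switches after a new speaker has been active for `min_hold` consecutive frames, and an exponential moving average that eases the crop centre towards its target. Both parameters are hypothetical tuning knobs.

```python
from typing import Dict, List, Optional

def smooth_speaker_track(active: List[Optional[str]],
                         positions: Dict[str, float],
                         min_hold: int = 3,
                         alpha: float = 0.5) -> List[Optional[float]]:
    """Turn per-frame active-speaker IDs into a smoothed crop centre (x, pixels)."""
    current: Optional[str] = None    # speaker the camera is framing
    candidate: Optional[str] = None  # speaker waiting to take over
    streak = 0                       # consecutive frames seen for the candidate
    centre: Optional[float] = None   # smoothed crop centre
    out: List[Optional[float]] = []
    for speaker in active:
        if speaker is not None and speaker != current:
            if speaker == candidate:
                streak += 1
            else:
                candidate, streak = speaker, 1
            # Frame the first speaker immediately; later switches need min_hold frames.
            if current is None or streak >= min_hold:
                current, candidate, streak = speaker, None, 0
        elif speaker == current:
            candidate, streak = None, 0  # a brief interruption is forgotten
        if current is not None:
            target = positions[current]
            # Exponential moving average eases the crop instead of jumping.
            centre = target if centre is None else centre + alpha * (target - centre)
        out.append(centre)
    return out
```

A single-frame blip from another speaker is ignored, while a sustained change pans the crop over a few frames rather than cutting abruptly.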

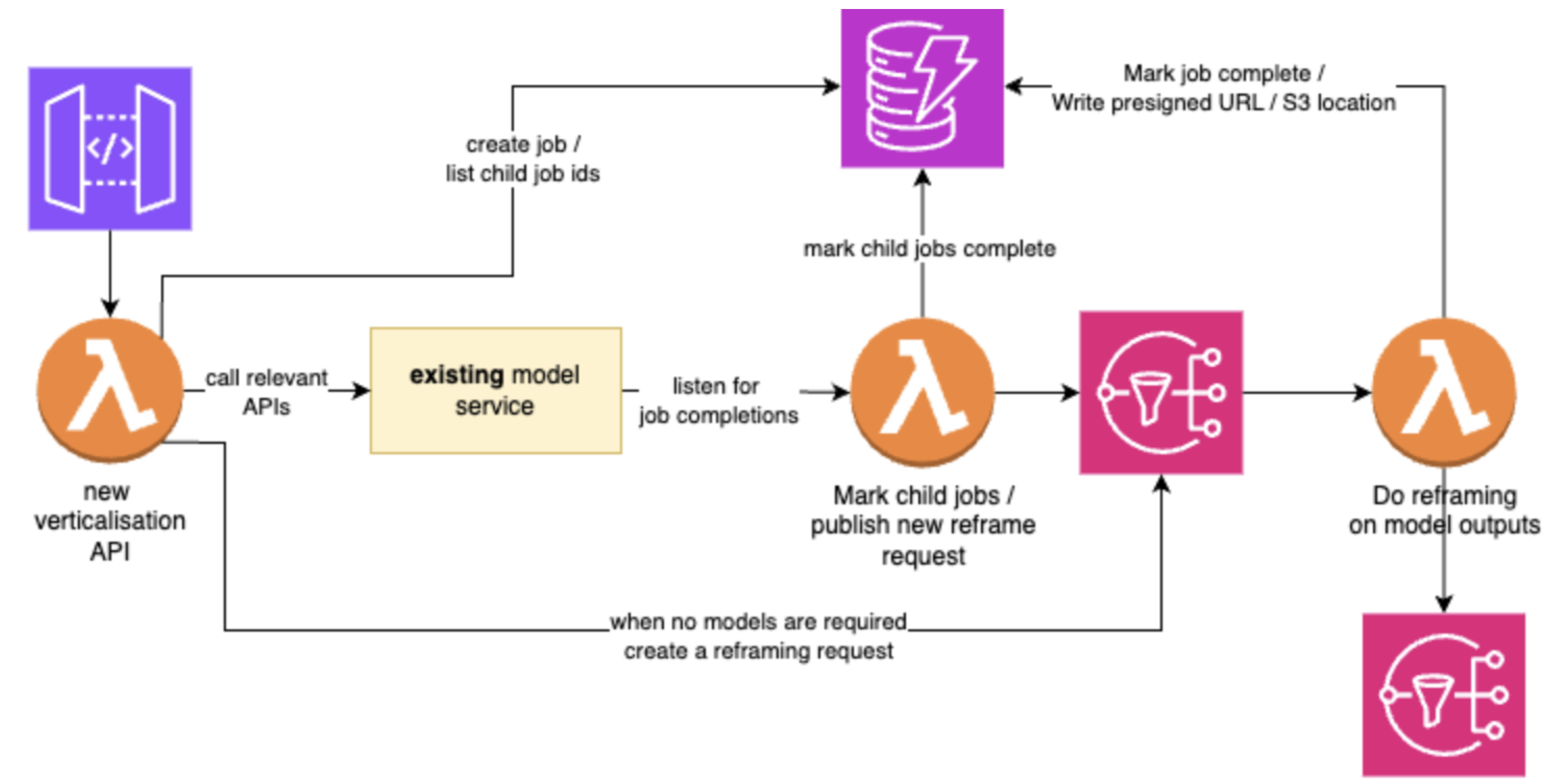

We also developed a robust system where users can upload or select a video, automatically reformat it for vertical viewing by focusing on the speaker, and access the optimised content with ease. The workflow is managed by AWS Step Functions, which runs each task and sends Slack alerts if anything goes wrong, so issues can be quickly fixed.
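As an illustration of the orchestration pattern, the Python below builds an Amazon States Language definition in which every task catches all errors (`States.ALL`) and routes to a Slack notification step before failing. The step names and Lambda ARNs are hypothetical placeholders; the real pipeline's stages aren't described here.

```python
import json
from typing import Dict

def build_state_machine(tasks: Dict[str, str], slack_notifier_arn: str) -> dict:
    """Chain `tasks` (state name -> Lambda ARN) in order, with a shared error path."""
    names = list(tasks)
    states: dict = {}
    for i, name in enumerate(names):
        state = {
            "Type": "Task",
            "Resource": tasks[name],
            # Any error in this step jumps to the Slack alert; ResultPath keeps
            # the original input and stores the error details under $.error.
            "Catch": [{"ErrorEquals": ["States.ALL"],
                       "ResultPath": "$.error",
                       "Next": "NotifySlack"}],
        }
        if i + 1 < len(names):
            state["Next"] = names[i + 1]
        else:
            state["End"] = True
        states[name] = state
    states["NotifySlack"] = {"Type": "Task", "Resource": slack_notifier_arn, "Next": "Failed"}
    states["Failed"] = {"Type": "Fail", "Error": "VerticalisationFailed"}
    return {"StartAt": names[0], "States": states}

if __name__ == "__main__":
    arn = "arn:aws:lambda:us-east-1:123456789012:function:{}"  # example account ID
    definition = build_state_machine(
        {"DetectScenes": arn.format("detect-scenes"),
         "TrackSubject": arn.format("track-subject"),
         "RenderVertical": arn.format("render-vertical")},
        arn.format("notify-slack"),
    )
    print(json.dumps(definition, indent=2))
```

Routing every failure through one notification state keeps the alerting logic in a single place rather than repeating it in each task.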

Security and networking are managed through AWS Lambda and API Gateway, ensuring authenticated access and streamlined operations. File uploads are handled with S3, while EC2 is used only for processing the most compute-intensive tasks, helping to reduce costs and improve scalability.

These technical decisions and architecture provide the media company with a faster, more reliable way to produce high-quality mobile-first content, all while improving operational efficiency and user satisfaction.

To further boost viewer engagement, we used Saliency Maps generated by the SUM model to automatically highlight the most visually important parts of each video frame. This lets teams crop video precisely where viewers' attention is strongest, which is crucial during the first 15 to 30 seconds, when social media audiences decide whether to keep watching or drop off.
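To show how a saliency map can drive cropping, here is a sketch that picks the horizontal window with the highest total saliency. It assumes the model output has already been converted into a 2D grid of non-negative scores (rows by columns); the function name and inputs are illustrative and not part of SUM's interface.

```python
from typing import Sequence

def best_crop_from_saliency(saliency: Sequence[Sequence[float]], crop_w: int) -> int:
    """Left x of the crop_w-wide window with the highest total saliency."""
    h, w = len(saliency), len(saliency[0])
    if crop_w >= w:
        return 0
    # Collapse rows: total saliency in each column.
    col = [sum(saliency[r][c] for r in range(h)) for c in range(w)]
    window = sum(col[:crop_w])
    best, best_left = window, 0
    # Slide the window one column at a time, updating the sum incrementally.
    for left in range(1, w - crop_w + 1):
        window += col[left + crop_w - 1] - col[left - 1]
        if window > best:
            best, best_left = window, left
    return best_left
```

The sliding sum makes each frame linear in its width, so the step stays cheap even when run on every frame.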

Aside from boosting engagement, Saliency Maps provide valuable insights into the model's decision-making process. This transparency helps teams refine content presentation strategies over time, leading to more effective, viewer-focused outputs. We also used modular, open-source tools to build a solution that balances accuracy with cost-efficiency, keeping the camera focused on what matters most without adding complexity for the engineering team or unnecessary cost for the organisation.

This combination of smart automation and strategic insight helps the media company maximise impact while maintaining operational agility.

Accelerating video turnaround from months to weeks

Converting the media company's extensive video archive into vertical, social-media-friendly formats by hand could have taken months. The time and effort required risked slowing content delivery and reducing the company's flexibility to respond in a fast-paced, competitive market.

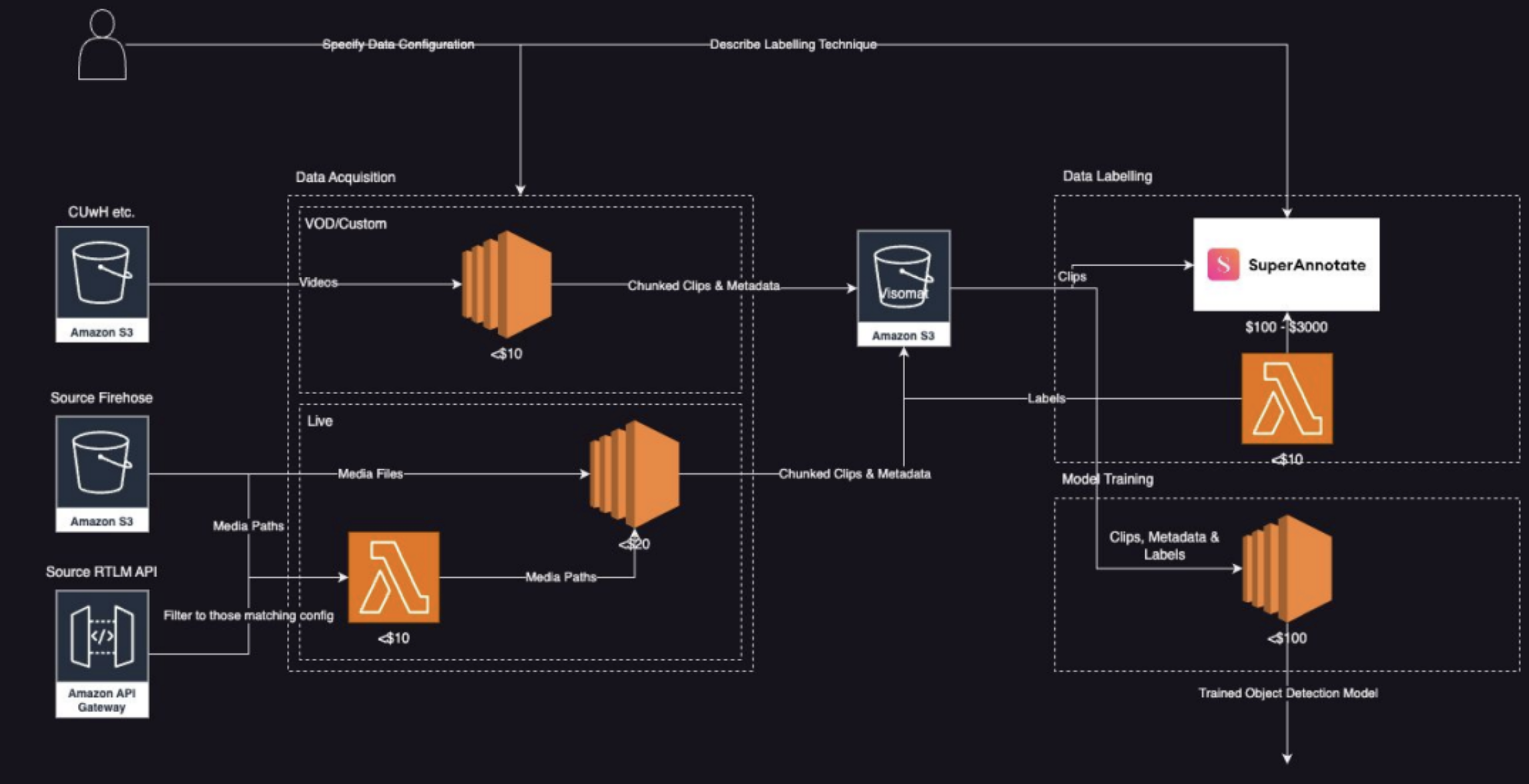

To relieve this pressure on engineering operations, we built an AI-driven system that automates the entire video conversion process. It handles everything from data gathering and labelling to model training and deployment, with very little human input required.

Using this system, we cut turnaround times from months to just a few weeks. This scalable solution speeds up content delivery, reduces operational costs, and frees the media company's teams to focus on strategic priorities like monetisation, personalisation, and innovation.

Powering the media company’s vision with scalable technology and full ownership

The media company launched a standalone consumer app to meet the rising demand for mobile-first video consumption. The app delivers a personalised, scrollable feed featuring both long-form and short-form content. At its core is a real-time recommendations engine that adapts dynamically to user behaviour, continuously evolving based on individual viewing patterns to drive engagement and retention, especially for short-form videos.
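The engine's internals aren't described here, but the idea of adapting to viewing patterns can be sketched with per-user tag affinities that grow with watch time and decay exponentially. Everything in this example (the tags, the half-life, the `watch_fraction` signal) is a hypothetical illustration, not the app's actual recommender.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

class TagAffinity:
    """Hypothetical per-user interest scores over content tags."""

    def __init__(self, half_life_s: float = 3600.0) -> None:
        self.rate = math.log(2) / half_life_s  # decay rate per second
        self.scores: Dict[str, float] = defaultdict(float)
        self.last_t = 0.0

    def observe(self, t: float, tags: List[str], watch_fraction: float) -> None:
        """Record a view at time t (seconds); older interests fade first."""
        factor = math.exp(-self.rate * (t - self.last_t))
        for tag in self.scores:
            self.scores[tag] *= factor
        for tag in tags:
            self.scores[tag] += watch_fraction
        self.last_t = t

    def rank(self, items: List[Tuple[str, List[str]]]) -> List[str]:
        """Order (item_id, tags) candidates by summed affinity, highest first."""
        def score(item: Tuple[str, List[str]]) -> float:
            return sum(self.scores.get(tag, 0.0) for tag in item[1])
        return [item_id for item_id, _ in sorted(items, key=score, reverse=True)]
```

Because every view updates the scores immediately, the next ranking already reflects the latest behaviour, which is the property the feed relies on for short-form content.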

The media company and YLD continue to collaborate on the proprietary video verticalisation model, built from the ground up on open-source models trained on the company's own data. Unlike off-the-shelf tools, this solution grants the media company full ownership, enabling faster iteration, deeper customisation, and strategic control over its content pipeline.

Through close collaboration, we transformed what was originally a manual process by developing an asynchronous API that automatically verticalised over 500 of the media company's videos in just a few hours. Its impact has prompted the company's other engineering teams to explore integration opportunities, given that the model outperforms commercial alternatives in accuracy, flexibility, and adaptability.
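The asynchronous pattern can be sketched with `asyncio`: submitting a video returns a job ID immediately, a semaphore caps how many conversions run at once, and callers check status later. `verticalise` is a hypothetical stand-in for the real conversion, which in practice would hand work to the compute backend.

```python
import asyncio
import uuid
from typing import Dict, List, Set, Tuple

async def verticalise(video_id: str) -> str:
    """Hypothetical stand-in for one conversion job."""
    await asyncio.sleep(0.01)  # simulate processing time
    return f"{video_id}-vertical.mp4"

class VerticalisationJobs:
    def __init__(self, max_concurrency: int = 8) -> None:
        self._sem = asyncio.Semaphore(max_concurrency)  # cap on parallel jobs
        self._tasks: Set[asyncio.Task] = set()
        self.status: Dict[str, str] = {}   # job_id -> queued/running/done/failed
        self.results: Dict[str, str] = {}  # job_id -> output location

    def submit(self, video_id: str) -> str:
        """Queue a video and return a job ID straight away (call from a coroutine)."""
        job_id = uuid.uuid4().hex
        self.status[job_id] = "queued"
        task = asyncio.create_task(self._run(job_id, video_id))
        self._tasks.add(task)
        task.add_done_callback(self._tasks.discard)
        return job_id

    async def _run(self, job_id: str, video_id: str) -> None:
        async with self._sem:
            self.status[job_id] = "running"
            try:
                self.results[job_id] = await verticalise(video_id)
                self.status[job_id] = "done"
            except Exception:
                self.status[job_id] = "failed"

    async def wait_all(self) -> None:
        await asyncio.gather(*self._tasks)

async def main(n: int = 10) -> Tuple[VerticalisationJobs, List[str]]:
    jobs = VerticalisationJobs(max_concurrency=4)
    ids = [jobs.submit(f"video-{i}") for i in range(n)]
    await jobs.wait_all()
    return jobs, ids

if __name__ == "__main__":
    jobs, ids = asyncio.run(main())
    print(sum(s == "done" for s in jobs.status.values()), "of", len(ids), "done")
```

Decoupling submission from completion is what lets a large batch be queued in one go while the concurrency limit protects the processing infrastructure.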

This approach not only supports immediate delivery goals but also positions the media company to lead the future of mobile content delivery, with technology it fully owns and can shape on its own terms.